The Empty Promise of AI: Echoes of Edtech’s Past and the Creative Starvation of the Present

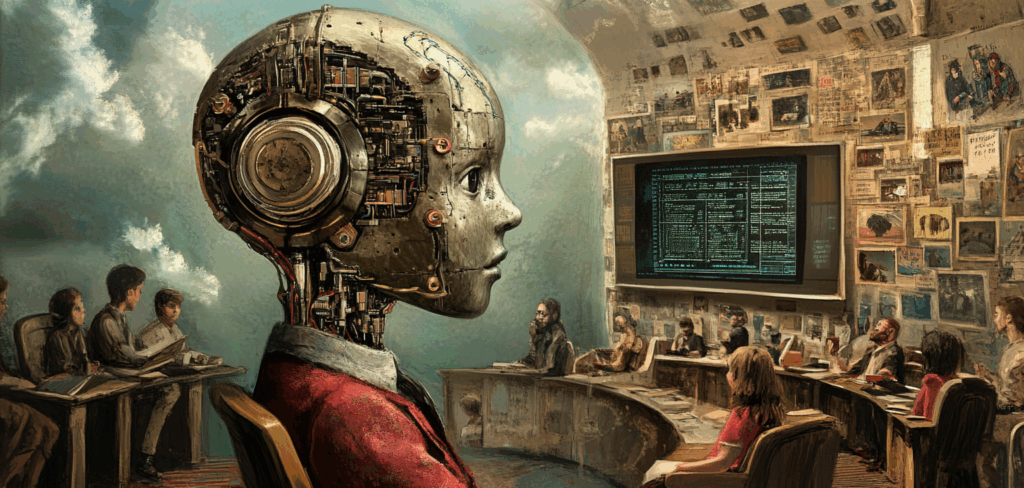

In recent years, artificial intelligence (AI) has taken center stage in conversations about the future of education and creative industries. Promising effortless automation, personalized instruction, and instant content generation, tech companies and venture-backed evangelists paint a seductive picture of a world where AI solves the very human problems of learning and creation. Yet for those who lived through the last two decades of educational technology “revolutions,” this utopian vision rings familiar – and hollow.

The promises surrounding AI in the classroom echo those made during the early 2000s push for interactive whiteboards (IWBs), which were marketed as transformative, turnkey tools that would revolutionize student engagement. In reality, their meaningful integration required significant teacher effort, redesign of pedagogy, and adaptation to the quirks of hardware and software. As with IWBs, the deeper problem lies not in the technology itself but in the illusion of simplicity. No tool, regardless of sophistication, can replace the cognitive, emotional, and cultural labor educators perform when connecting materials to meaningful learning.

This pattern of false promises has extended beyond education into the creative realm, where AI-generated content threatens to replace thoughtful craftsmanship with high-volume mimicry. The result is a cultural saturation – an aesthetic glut of formulaic, non-nutritive output. This phenomenon evokes a kind of “creative rabbit starvation,” where the abundance of derivative content paradoxically starves audiences and creators alike of substance, innovation, and risk. By replacing the visionary with the efficient, AI disempowers the very human impulse to push boundaries, to surprise, to make meaning.

Underlying both the educational and creative disruptions is a consistent pattern: elite technologists and billionaire entrepreneurs seeking to extract value from institutions that should be protected as human rights. Their tools, often developed without classroom experience or cultural accountability, are driven by a logic of control and efficiency. This same logic governs the attention economies of social media, which have already hollowed out the public sphere with dopamine-driven addiction and algorithmic feedback loops.

This essay explores the historical parallels, dystopian trajectories, and ethical consequences of current AI hype. It critiques the narrative of simplicity and progress used to justify AI’s rapid encroachment into education and the arts. By analyzing these developments through a critical, interdisciplinary lens, it seeks to reclaim the agency of educators and creators against a tide of techno-solutionism. To resist these empty promises is not to reject AI outright, but to demand more from its advocates: truth, context, and respect for the work that cannot – and should not – be automated.

Historical Parallel: Interactive Whiteboards and the Myth of Simplicity

The enthusiasm surrounding artificial intelligence in education today is not unprecedented. In the early 2000s, interactive whiteboards (IWBs) were introduced with similar fanfare, billed as revolutionary tools that would effortlessly transform classroom learning. Education departments, district leaders, and edtech vendors promised that IWBs would increase student engagement, facilitate digital learning, and save teachers time. The marketing narrative framed these tools as a leap into the future – a frictionless convergence of pedagogy and technology.

But for many educators, the reality was far more complex. Implementing IWBs required extensive lesson redesign, hours of extra planning, and a steep learning curve. Teachers needed to create or modify materials specifically for the boards, and many reported that using the boards disrupted instructional flow rather than enhancing it. The presence of a digital tool did not inherently increase student understanding – it only magnified the need for purposeful, well-scaffolded design. In a comprehensive review, Higgins, Beauchamp, and Miller (2007) found that while IWBs had potential benefits, “the effectiveness of the IWB is dependent upon the pedagogy of the teacher” and that the effort required to integrate them meaningfully was consistently underestimated.

This pattern – a tool that promises ease but demands greater complexity – should be familiar. IWBs did not fail because they were ineffective by nature, but because they were implemented with a fantasy of simplicity. They serve as a cautionary tale: technology in education amplifies teacher capacity only when embedded in thoughtful, context-driven pedagogy. Without that, even the flashiest innovation becomes a burden, not a boon.

The current AI wave follows this same arc but at a faster and more chaotic pace. The technologies change almost weekly, making it difficult for educators to establish routines or reliable strategies. The underlying myth remains: that tools can replace the time, creativity, and care it takes to truly teach. What IWBs proved slowly over years, AI is proving overnight.

The Dystopia of Ready Player One Feels Closer Than Ever

When Ernest Cline’s Ready Player One was published in 2011, its vision of a society escaping into a virtual reality education system seemed fantastical. In the novel, students log into the OASIS – a fully immersive metaverse – for school, social interaction, and entertainment. Education in the OASIS is self-paced, gamified, and depersonalized. At the time of publication, this dystopian portrayal functioned as a thought experiment: a warning about the seductive dangers of techno-utopianism. Now, it reads more like a forecast.

In the wake of the COVID-19 pandemic, virtual learning platforms became widespread. Edtech companies rapidly scaled, pitching remote instruction as both necessary and efficient. Today, some school districts continue to experiment with hybrid or fully online models, while others layer AI-generated lesson plans and assessments onto traditional classrooms. In the name of “scalability,” educational experiences are increasingly mediated by algorithms. The logic of the OASIS is no longer fiction – it’s policy.

As LitCharts notes in its thematic analysis, the world of Ready Player One presents a society “so broken and dystopian that it turns to immersive technology as its primary means of escape” (LitCharts, n.d.). This is not just escape, but abdication. Education becomes an individual pursuit of points, badges, and correct answers rather than a shared act of inquiry, care, and personal growth.

The parallels to AI-driven learning platforms today are striking. Platforms advertise adaptive quizzes, instant feedback, and “automated engagement,” minimizing the role of the teacher to a content curator or proctor. While marketed as efficient, these systems make invisible the immense labor of instructional design. They also threaten to collapse the human relationships at the heart of learning – replacing mentorship and dialogue with metrics and heat maps. These developments amplify the worst tendencies of education-as-transaction, steering classrooms further away from their transformative potential.

If Ready Player One once seemed an exaggerated metaphor, it now feels like a documentary in the making. What Cline imagined as a warning has instead become a blueprint for edtech entrepreneurs who conflate gamification with pedagogy and profit with progress.

The Myth of AI as a Simplifying Force in Education

The marketing narrative around artificial intelligence (AI) in education hinges on a seductive promise: that teaching can be made easier, faster, and more efficient through automation. “Smart grading,” “AI tutors,” and “adaptive assessments” promise to lift the burden from overworked educators. But just as interactive whiteboards demanded new pedagogical labor behind their sleek interfaces, effective use of AI in education demands even more – more planning, more oversight, and more understanding of complex human-machine dynamics.

The illusion that AI simplifies education is fueled by the language of edtech marketing, which tends to emphasize effortlessness over substance. In reality, tools like ChatGPT or AI lesson planners can generate surface-level content quickly, but their use in meaningful instruction often requires deep editing, contextual adaptation, and ongoing monitoring for accuracy and bias. Educators must critically assess whether AI-generated materials align with curriculum standards, student needs, and ethical considerations. This work is not “offloaded” by AI – it is shifted, obscured, and intensified.

Frameworks like SAMR (Substitution, Augmentation, Modification, Redefinition) and TPACK (Technological Pedagogical Content Knowledge) make clear that the value of technology integration is determined not by the sophistication of the tool, but by how it is meaningfully embedded within pedagogy. The SAMR model, developed by Dr. Ruben Puentedura, categorizes four different degrees of classroom technology integration, ranging from simple substitution to complete redefinition of tasks, emphasizing that technology should transform learning experiences rather than just enhance them. Similarly, the TPACK framework, introduced by Mishra and Koehler, identifies the intersection of technological, pedagogical, and content knowledge as essential for effective technology integration in teaching.

Yet the current pace of AI development makes such intentionality difficult. Large language models (LLMs) evolve rapidly, with major functionality changes rolling out every few months. This instability prevents long-term planning and forces educators into a state of constant recalibration. The same lesson plan that worked last month may now behave differently in the hands of an updated AI assistant. As a result, rather than simplifying instruction, AI adds new layers of complexity – demanding technical fluency, critical literacy, and pedagogical agility just to keep up.

More troublingly, the allure of “smart” systems obscures the relational nature of learning. Education is not simply the transmission of knowledge, but the co-construction of understanding between human beings. It requires trust, empathy, cultural nuance, and the kind of intuition that no algorithm can replicate. When AI is positioned as a replacement rather than a support, the essence of teaching is at risk.

The belief that AI will simplify teaching is not just misguided – it’s dangerous. It underestimates the complexity of human learning, undervalues the expertise of educators, and invites the substitution of rich instructional practice with brittle, untested automation.

The “Creative Rabbit Starvation” of AI-Generated Content

In survival scenarios, “rabbit starvation” refers to malnutrition resulting from consuming only lean meat without sufficient fat, leading to a paradox of eating plenty yet starving. This metaphor aptly describes the current deluge of AI-generated content flooding digital platforms – a phenomenon where an overabundance of low-quality, derivative material leaves audiences creatively malnourished.

The term “AI slop” has emerged to characterize this influx of subpar, machine-generated content. On platforms like Medium, analyses have revealed that nearly half of recent posts may be AI-generated, often lacking originality and depth (Wired, 2024). Similarly, defunct media outlets have been resurrected and filled with AI-produced articles, diluting the quality of information available (Wired, 2024).

This saturation isn’t limited to written content. The music industry faces a similar challenge, with AI-generated compositions flooding streaming platforms. A notable case involves a $10 million fraud scheme where AI-created songs were streamed en masse to generate royalties, highlighting concerns about authenticity and the devaluation of genuine artistry (Wired, 2024).

The proliferation of AI-generated content raises critical questions about the future of creativity. While AI can assist in content creation, overreliance on it risks overshadowing human voices and diminishing the richness of cultural expression. As audiences become inundated with formulaic outputs, the space for innovative, thought-provoking work shrinks, leading to a homogenized digital landscape.

To preserve the integrity of creative industries, it’s imperative to recognize and address the implications of this “creative rabbit starvation.” Encouraging responsible AI use, promoting human-centric content, and implementing measures to distinguish and prioritize authentic creations are essential steps toward maintaining a vibrant and diverse cultural ecosystem (Logically Facts).

Tech Billionaires and the Commodification of Education

In recent decades, a cadre of tech billionaires has increasingly influenced public education policy, often under the guise of philanthropy and innovation. While their investments are frequently portrayed as altruistic efforts to reform and improve educational systems, critics argue that these initiatives often prioritize market-based solutions over equitable, community-driven approaches.

For instance, Mark Zuckerberg’s $100 million donation to Newark’s public schools in 2010 aimed to transform the district into a national model for education reform. However, the initiative faced significant challenges, including top-down mandates without sufficient community engagement, leading to public backlash and skepticism about the reform’s sustainability (The New Yorker).

Similarly, the Bill & Melinda Gates Foundation has invested billions in education reform, supporting initiatives like the Common Core State Standards and charter school expansion. Despite these substantial investments, some of the foundation’s educational programs have faced criticism for not achieving desired outcomes and for promoting policies that may marginalize educators and students (Wikipedia).

These examples highlight a broader trend where wealthy individuals and organizations exert significant influence over public education, often without adequate input from educators, parents, and communities. This dynamic raises concerns about the privatization of education and the potential erosion of democratic decision-making in public schools.

Moreover, the push for school voucher programs, heavily funded by affluent donors, has sparked debates about the redirection of public funds to private institutions. In Texas, for example, a $1 billion voucher program backed by billionaires like Jeff Yass and the Wilks brothers has been criticized for potentially undermining public education and favoring wealthier families (Houston Chronicle).

Critics argue that these market-driven reforms often fail to address the root causes of educational disparities, such as poverty and systemic inequality. Instead, they may exacerbate existing issues by diverting resources from public schools and promoting standardized, one-size-fits-all solutions that do not account for the diverse needs of students.

While the involvement of tech billionaires in education reform brings substantial financial resources and attention to pressing issues, it also raises critical questions about accountability, equity, and the true beneficiaries of such initiatives. Ensuring that educational policies are shaped by inclusive, community-centered processes is essential to uphold the integrity and democratic foundations of public education.

Resisting the Myth of Effortless Progress

The promise of AI as a panacea for education and creativity echoes loudly – but emptily – across today’s cultural and pedagogical landscape. Like the interactive whiteboards of the early 2000s, AI tools are introduced with confident marketing and lofty claims, only to require more effort, more adaptation, and more labor to integrate meaningfully. Meanwhile, in classrooms and creative fields alike, a glut of machine-generated content overwhelms audiences, threatens human originality, and confuses novelty with innovation.

This is not merely a matter of technology failing to meet expectations. It is the deliberate outcome of a cultural paradigm where convenience and speed are prized above depth and quality. The educational vision of Ready Player One – once imagined as science fiction – is dangerously close to reality, as schools lean into adaptive learning software and tech entrepreneurs sell scalable “solutions” that reduce teaching to algorithmic triage. In this model, education becomes data, and educators become system operators.

The consequences extend beyond pedagogy into the creative sphere, where the metaphor of “creative rabbit starvation” captures the chilling effect of AI-generated abundance. Content platforms are increasingly saturated with polished, predictable, derivative material that drowns out the rare voices still striving to say something new. This is not a liberation of creativity – it is its dilution.

Underlying all of this is a deeper structural force: the increasing control of public goods by private interests. The same billionaire technocrats who created addictive social media ecosystems now claim to rescue the schools and redefine the arts. Their philanthropic interventions often serve extractive purposes, reshaping institutions of learning and culture into marketplaces, and reorienting public attention around systems of monetized engagement.

But tools are not destiny. AI is not inherently a force for harm. Its effects depend on how – and by whom – it is deployed. The real danger lies not in the code but in the myth that technology alone can replace the profoundly human work of education and creation. To defend that work, we must reject the seduction of frictionless futures. Instead, we must invest in the difficult, messy, necessary labor of teaching, making, thinking, and imagining.

In doing so, we reclaim a vision of progress that is not about speed, but about care. Not about efficiency, but about meaning. Not about replacement, but about reinvention. AI may play a role in that future – but it cannot lead the way.

References

- Higgins, S., Beauchamp, G., & Miller, D. (2007). Reviewing the literature on interactive whiteboards. Learning, Media and Technology, 32(3), 213–225. https://voiceofsandiego.org/wp-content/uploads/app/pdf/whiteboards.pdf

- LitCharts. (n.d.). Utopia vs. Dystopia Theme in Ready Player One. https://www.litcharts.com/lit/ready-player-one/themes/utopia-vs-dystopia

- Taylor Institute for Teaching and Learning. (n.d.). SAMR and TPACK: Two models to help with integrating technology into teaching and learning. https://taylor-institute.ucalgary.ca/resources/SAMR-TPACK

- PowerSchool. (2023). SAMR model: A practical guide for K–12 classroom technology integration. https://www.powerschool.com/blog/samr-model-a-practical-guide-for-k-12-classroom-technology-integration

- Educational Technology. (n.d.). Technological pedagogical content knowledge (TPACK) framework. https://educationaltechnology.net/technological-pedagogical-content-knowledge-tpack-framework

- Wired. (2024, April). AI Slop is Flooding Medium. https://www.wired.com/story/ai-generated-medium-posts-content-moderation

- Wired. (2024, April). AI Bots Are Gaming the Music Industry. https://www.wired.com/story/ai-bots-streaming-music

- Wired. (2024, May). Zombie Alt-Weeklies Are Stuffed With AI Slop. https://www.wired.com/story/zombie-alt-weeklies-are-stuffed-with-ai-slop-about-onlyfans

- Hettleman, K. (2024, April 15). Can billionaires save public education? Maryland Matters. https://marylandmatters.org/2024/04/15/kalman-hettleman-can-billionaires-save-public-education

- Houston Chronicle. (2024). Opinion: House right to vote down school vouchers. https://www.houstonchronicle.com/opinion/editorials/article/school-vouchers-house-vote-20281444.php
